Turning Capital into Knowledge

We are living through one of those rare moments when technology doesn’t just improve; it transforms. Consumer-ready artificial intelligence arrived in late 2022 with remarkable speed and impact. In such a short time, we’ve seen AI systems pass the bar exam, diagnose rare diseases from medical images, write functional computer code in seconds, translate between languages in real time with nuance once thought impossible, and generate photorealistic images from simple text descriptions. Students use it to learn, doctors to diagnose, engineers to design, and writers to draft. What once seemed like science fiction has become as mundane as checking email.

But we’ve been here before. The results are impressive, yet extrapolating recent growth into an unbroken trajectory risks serious misallocation of capital and a painful day of reckoning. We believe deeply in the transformative power of AI. Still, we also remember the dotcom era intimately and have studied past technological waves, when the assumption that investments could only go up and growth could only accelerate led investors to overlook an inconvenient truth: the path to Nirvana is rarely smooth. Many of the right bets were made in 1999—on the internet, on e-commerce, on digital transformation—but the timing, winners, valuation, and assumption of frictionless scale proved catastrophically wrong for many start-ups and investors.

This transition to an AI-powered economy is being built at enormous scale through some of the largest capital deployments in modern history. For example, a Harvard study estimates that in the first half of 2025, AI-related investment accounted for 92% of U.S. GDP growth, contributing roughly 1.1 percentage points to that growth.

Behind every conversation with ChatGPT, every AI-generated image, every smart assistant response, lies an infrastructure requiring billions of dollars, millions of processors, and, increasingly, gigawatts of electrical power. The sheer magnitude of this investment is exciting to witness. Still, it also demands that we pause and ask a critical question: Will the insatiable demand investors are pricing in actually materialize to absorb the supply being built at breakneck speed, or are we once again building ahead of the curve?

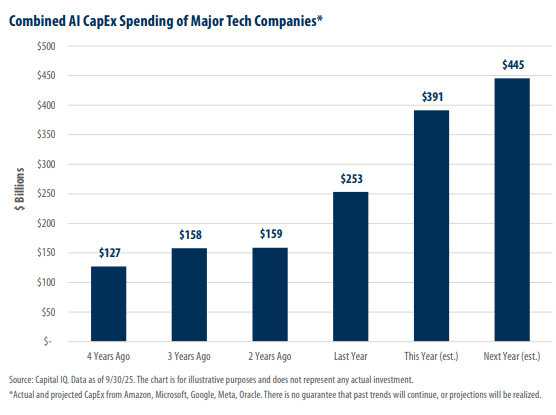

The Spending Boom

The world’s largest technology firms are investing at a rate once reserved for oil majors, telecommunication companies, and electric utilities. The goal is simple: feed artificial intelligence models that convert electricity into language and knowledge.

The numbers are staggering:

- Microsoft expects $50–55 billion in capital expenditures for fiscal 2025, nearly all directed to AI data centers and Nvidia hardware

- Amazon Web Services plans roughly $60 billion across 2024–2025 to expand its global cloud infrastructure

- Alphabet (Google) lifted its 2025 capex forecast to $48 billion, emphasizing AI accelerators and new data-center construction

- Meta raised its infrastructure guidance to $35–40 billion for 2025 as it retrofits older sites for dense AI racks

Collectively, these tech giants are on pace to exceed $250 billion in spending next year, which is roughly equal to the size of the entire semiconductor industry a decade ago. (Note that several of these forecasts were raised again in the day before this was written.)

These investments have propelled the share prices of companies tied to the AI supply chain (chipmakers, cooling system providers, and grid equipment manufacturers) by triple-digit percentages. But the deeper question remains: what is all this capital actually building?

A Quick Guide to the Numbers

To understand where this money is going and what it is empowering, here are a few terms worth knowing:

- Gigawatt (GW): A measure of continuous power equal to one billion watts, enough to light roughly 100 million 10-watt LED bulbs or power about one million homes

- Token: The smallest chunk of text an AI model processes; think of it as about three-quarters of an English word or one syllable

- Training vs. Inference: Training is the one-time process of teaching a model (think: weeks of intensive computing). Inference is the everyday work—answering your questions, writing text, and analyzing data.

One more note: because we’re dealing with truly massive numbers, we’ll occasionally use scientific notation. To refresh your high school math: 10^3 = 1 thousand, 10^6 = 1 million, 10^9 = 1 billion, and let’s jump to 10^18, which equals 1 thousand quadrillion. To put the last number into perspective, the U.S. debt of $38 trillion is only 3.8% of a quadrillion.
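For readers who prefer to check the notation by machine, here is a minimal sketch. It only restates the powers of ten and the debt comparison from the paragraph above; the constant names are ours, chosen for illustration.

```python
# Powers of ten used in this piece, written with Python's "e" notation.
THOUSAND = 1e3
MILLION = 1e6
BILLION = 1e9
TRILLION = 1e12
QUADRILLION = 1e15
THOUSAND_QUADRILLION = 1e18

# U.S. debt figure quoted in the text ($38 trillion).
US_DEBT_DOLLARS = 38 * TRILLION

# Share of one quadrillion that the debt represents.
debt_share_of_quadrillion = US_DEBT_DOLLARS / QUADRILLION
print(f"U.S. debt as a share of one quadrillion: {debt_share_of_quadrillion:.1%}")
```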

From Power Plants to Printing Presses

When people picture data centers, they often imagine buildings filled with servers and blinking lights. In reality, they are modern power plants—not generating electricity but consuming it on an industrial scale to turn electrons into knowledge (the industry has moved from calling them data centers to now referring to them as knowledge factories).

If current projections hold, global data-center demand could exceed 100 gigawatts of continuous power by the end of this decade, more than double the industry’s current energy usage. To put that in perspective: that’s roughly the electricity used by 100 million U.S. homes running at once.

About half of that energy, some 50 GW, is expected to go toward AI inference. Inference is what happens every time a model answers a question, writes a paragraph, or generates an image. Training a model may take weeks; inference never stops. It is the daily heartbeat of the AI economy.

So let’s follow that energy and see where it leads.

The Journey from Electricity to Language

This is where we need to use scientific notation, given the size of the numbers. Your eyes may blur, but stick with it!

Starting point: Energy per token

At current efficiency levels, processing each token uses about 0.001 watt-hours of energy. That’s tiny—but remember, this happens billions of times per second across all these data centers.

Step 1: How much total energy?

Fifty gigawatts running continuously for a year gives us a massive pool of energy. The math:

- 50 billion watts × 24 hours × 365 days = about 438 billion kilowatt-hours per year

- For the steps that follow, we use a working figure of roughly 470 trillion watt-hours of energy per year, the same order of magnitude as the 438 billion kilowatt-hours (438 trillion watt-hours) above
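The first bullet can be reproduced in a few lines. This is a minimal sketch, assuming a perfectly constant 50 GW load and a 365-day year; the function name is ours.

```python
# Step 1 sketch: annual energy drawn by a constant power load.
INFERENCE_POWER_GW = 50        # from the text: about 50 GW for AI inference
HOURS_PER_YEAR = 24 * 365      # 8,760 hours, ignoring leap years


def annual_energy_wh(power_gw: float, hours: int = HOURS_PER_YEAR) -> float:
    """Return watt-hours consumed in a year by a constant load of power_gw gigawatts."""
    watts = power_gw * 1e9
    return watts * hours


energy_wh = annual_energy_wh(INFERENCE_POWER_GW)
energy_billion_kwh = energy_wh / 1e3 / 1e9   # Wh -> kWh -> billions of kWh

print(f"{energy_wh:.3e} watt-hours per year")
print(f"{energy_billion_kwh:.0f} billion kilowatt-hours per year")
```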

Step 2: Energy to tokens

Now divide that energy by the cost per token:

- 470 trillion watt-hours ÷ 0.001 watt-hours per token = 470 quadrillion tokens per year, or 470 × 10^15 (that is, 4.7 × 10^17) tokens in a year.
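The division is easy to get wrong by a few zeros, so here is a minimal sketch of it. Both inputs are the figures quoted in the text; the per-second rate is an extra line we added to give a sense of throughput.

```python
# Step 2 sketch: convert a yearly energy budget into a yearly token count.
ENERGY_WH_PER_YEAR = 470e12    # working figure from the text (470 trillion Wh)
WH_PER_TOKEN = 0.001           # energy per token from the text
SECONDS_PER_YEAR = 365 * 24 * 3600

tokens_per_year = ENERGY_WH_PER_YEAR / WH_PER_TOKEN
tokens_per_second = tokens_per_year / SECONDS_PER_YEAR

print(f"{tokens_per_year:.2e} tokens per year")
print(f"{tokens_per_year / 1e15:.0f} quadrillion tokens per year")
print(f"{tokens_per_second:.2e} tokens per second")
```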

If your eyes are glazing over, here’s what that number means in human terms:

In words:

Since each token is about three-quarters of a word, we’re talking about roughly 350 quadrillion words per year. That’s enough to rewrite every book ever published—thousands of times over—every single year.

In conversations:

A typical back-and-forth with ChatGPT uses about 200 tokens (your question plus its answer). At this scale, we’re talking about roughly 6.4 trillion conversations per day, or about 800 AI interactions for every person on Earth, every single day.

In newsletters:

In writing this newsletter, about 25,000 tokens were used to help investigate the data and edit the resulting newsletter. If the planned infrastructure were limited to newsletters, the capacity could generate roughly 600,000 newsletters per second.

In books:

A full-length novel contains about 80,000 words (or roughly 100,000 tokens). These data centers could theoretically generate about 4.7 trillion books per year, or nearly 600 for every person alive today. That’s around 13 billion new books every single day, more than humanity has written in its entire history.
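The comparisons in words, conversations, newsletters, and books all come from dividing the yearly token count by a per-item token cost. Here is a minimal sketch of that arithmetic using the per-item figures stated above. The world population of 8.1 billion is an assumption added here, since the text does not give one.

```python
# Human-scale conversions of the yearly token budget.
TOKENS_PER_YEAR = 470e15           # 470 quadrillion tokens, from the text
WORDS_PER_TOKEN = 0.75             # "about three-quarters of a word"
TOKENS_PER_CONVERSATION = 200      # question plus answer, from the text
TOKENS_PER_NEWSLETTER = 25_000     # from the text
TOKENS_PER_BOOK = 100_000          # about 80,000 words, from the text
WORLD_POPULATION = 8.1e9           # ASSUMPTION: not stated in the text
DAYS_PER_YEAR = 365
SECONDS_PER_YEAR = DAYS_PER_YEAR * 24 * 3600

words_per_year = TOKENS_PER_YEAR * WORDS_PER_TOKEN

conversations_per_day = TOKENS_PER_YEAR / TOKENS_PER_CONVERSATION / DAYS_PER_YEAR
conversations_per_person_per_day = conversations_per_day / WORLD_POPULATION

newsletters_per_second = TOKENS_PER_YEAR / TOKENS_PER_NEWSLETTER / SECONDS_PER_YEAR

books_per_year = TOKENS_PER_YEAR / TOKENS_PER_BOOK
books_per_day = books_per_year / DAYS_PER_YEAR
books_per_person_per_year = books_per_year / WORLD_POPULATION

print(f"Words per year:               {words_per_year:.2e}")
print(f"Conversations per day:        {conversations_per_day:.2e}")
print(f"Conversations/person/day:     {conversations_per_person_per_day:.0f}")
print(f"Newsletters per second:       {newsletters_per_second:,.0f}")
print(f"Books per year:               {books_per_year:.2e}")
print(f"Books per day:                {books_per_day:.2e}")
print(f"Books per person per year:    {books_per_person_per_year:.0f}")
```

Changing any single constant at the top shows how sensitive each comparison is to its input.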

In video:

The examples above result in a staggering amount of output. Video may be the one area that shows how the power assumptions may not be enough. With the assumptions above, the output is equivalent to 400,000 hours of HD video each year. A lot, but not extreme.

A note on uncertainty

The math you’ve just seen is meant to show scale, not predict the future. In the last two years, some LLMs have squeezed ~10× more tokens per watt. We assume that speed won’t continue forever—but we can’t know. New software tricks or memory designs could slash the cost of keeping conversation context; bigger models and longer prompts could raise it. Because we can’t estimate these forces with confidence, we leave them out—while acknowledging they could move the results by multiples, either way.
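To see how much the headline number moves with efficiency, here is a minimal sensitivity sketch. The energy budget and baseline cost per token come from the text. The three scenario multipliers are hypothetical, chosen only to illustrate a swing of 10× in either direction; they are not forecasts.

```python
# Sensitivity sketch: yearly tokens under different energy-per-token assumptions.
ENERGY_WH_PER_YEAR = 470e12       # working energy figure from the text
BASELINE_WH_PER_TOKEN = 0.001     # energy per token from the text

# HYPOTHETICAL multipliers on energy per token (illustrative only):
# below 1 means cheaper tokens, above 1 means costlier tokens.
SCENARIOS = {
    "10x more efficient": 0.1,
    "baseline": 1.0,
    "10x less efficient": 10.0,
}


def tokens_per_year(energy_wh: float, wh_per_token: float) -> float:
    """Tokens that a yearly energy budget can pay for at a given cost per token."""
    return energy_wh / wh_per_token


results = {
    name: tokens_per_year(ENERGY_WH_PER_YEAR, BASELINE_WH_PER_TOKEN * multiplier)
    for name, multiplier in SCENARIOS.items()
}

for name, tokens in results.items():
    print(f"{name:>20}: {tokens:.2e} tokens per year")
```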

Investment Perspective

From an investor’s standpoint, this is a paradoxical moment. The capital intensity of AI mirrors that of the early industrial era: enormous upfront costs, multiple large players competing for dominance, and an exciting vision of the future paired with an uncertain long-term payoff. While energy and infrastructure demand are undeniably secular, suppliers’ valuations may already assume an endless boom.

History shows that every transformative technology—from railroads to fiber optics—eventually meets the limits of physics, financing, or both.

At Auour, we see this as a boom with localized bubbles. The global appetite for computation and energy is durable. But the belief that every dollar of capex will compound without friction is not.

The key distinction for investors is this: Are you betting on electricity—the real, structural demand that will persist for decades? Or are you betting on euphoria, which tends to burn brighter and fade faster?

At Auour, we don’t try to predict the exact path of progress—but we do prepare for its uneven rhythm. Our process is built to separate structural shifts from temporary enthusiasm, identifying when optimism turns to overextension and when fear gives rise to opportunity. Regime-based investing helps us navigate cycles like this one—where innovation is real, but valuations and expectations may not move in tandem. In every era of transformation, our aim remains the same: to participate intelligently in growth while protecting against the excesses that too often follow it.

To that end, we have made some adjustments to our portfolios, moving tactical cash down to 10% and reducing our exposure to the largest U.S. companies. We have been discussing the eventual need to broaden our exposure to international markets and the sectors of the economy that can benefit from advances in AI. This week, we took a step in that direction.
